Have you ever read an article on Wikipedia and wondered whether it was more accurate or syntactically correct than it would have been if written by an individual or a small team? I have, and this curiosity led me to ask a question [many questions in reality]. This paper is a demonstration of both the scientific method and the application of text mining to answer that question and test a hypothesis. The layout of this paper is rather untraditional in that it mirrors the steps of the scientific method nearly one-for-one. The question asked is: how does the percentage of misspellings on Wikipedia, relative to total content, change through time?

## Table of Contents

* [The Question](#the_questions)

* [Hypothesis](#hypothesis)

* [Experiment](#experiment)

* [Results](#results)

* [Findings](#findings)

* [Appendices and References](#appendices_and_references)

## The Question {#the_questions}

When an article is published in a journal, or a book is published, it typically goes through several rounds of professional copy editing. During this process, experts comb through the text to correct misspellings and grammar mistakes. Sometimes this step is performed by a single person. At other times it is performed by a small team. Ideally, the end product is a finely polished publication with no mistakes.

In the case of Wikipedia, an article can be edited by anyone. Hundreds and even thousands of people can edit a single page through its lifetime for both content and syntax reasons. Wikipedia is perhaps the best-known example of crowdsourcing in that regard. How does this affect the percentage of misspellings on Wikipedia articles through time, relative to total content? Does the percentage of misspellings increase over time as new content is added? Does the percentage of misspellings decrease over time as more errors are caught and freely corrected by anonymous editors?

## Hypothesis {#hypothesis}

_I hypothesize that the percentage of misspellings on Wikipedia articles through time, relative to total content, remains steady._ The idea is that new misspellings are introduced through the introduction of entirely new and niche articles. New errors can also be introduced through the very act of correcting older errors. At the same time, existing content is refined and errors corrected.

Imagine this as a large machine with an input and an output. Going into the machine is new content with new [and existing] errors. Coming out of the machine is refined language. Both the input and output of the machine are the products of different individuals, but the machine itself consists of the masses. As a result, the machine does not act in a linear or progressive fashion. The input will frequently be disconnected from the resulting refined output. If the machine were frozen at various points in time, the inputs and outputs would rarely align. Finally, the very act of producing the refined content requires it to become input once again, which increases the probability of creating new errors.

## Experiment {#experiment}

To test the hypothesis, a simple text mining application was built to check the spelling of Wikipedia articles. The application performs 3 simple steps:

* Randomly select and retrieve an article.

* Check the spelling of the article contents at various points in time.

* Record the results for further analysis and aggregation.

Retrieving a random article is easy, but how do we check the spelling of the content? This is a very difficult question to answer. Have you ever wondered how many words there are in the English language? Not even the Oxford dictionary knows the answer to that question.

> It is impossible to count the number of words in a language, because it’s so hard to decide what actually counts as a word. . . . is dog-tired a word, or just two other words joined together? Is hot dog really two words, since it might also be written as hot-dog or even hotdog (“How Many Words . . .”)?

The solution selected for this test was to generate a dictionary of “valid” English words. This dictionary is called the valid word dictionary throughout the rest of this paper. If a word on a Wikipedia page was not contained within the dictionary, then it was counted as a misspelled word.
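As a rough illustration of the whitelist approach just described, the following Python sketch counts every word that is absent from a set of valid words as misspelled. The function name `misspelling_rate`, the miniature dictionary, and the choice to lowercase words before lookup are assumptions made for this example; they are not taken from the original application.

```python
def misspelling_rate(words, valid_words):
    """Return the fraction of words not found in the valid word dictionary."""
    if not words:
        return 0.0
    misspelled = [w for w in words if w.lower() not in valid_words]
    return len(misspelled) / len(words)


# Hypothetical miniature dictionary; the real one was far larger.
valid_words = {"the", "quick", "brown", "fox"}
sample = ["The", "quikc", "brown", "fox"]
print(misspelling_rate(sample, valid_words))  # one of the four words is flagged
```

Note that any word missing from the dictionary is flagged, whether it is a true typo or simply a valid word the dictionary does not know.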

### Compilation of the Valid Word Dictionary

Initially, only the _12dicts_ word list created by Kevin Atkinson was used (Atkinson). Creating the dictionary required processing multiple text files and weeding out duplicate words. The final dictionary contained just over 111,000 words. Initial samples were showing ~12% misspelling rates, most, if not all, of which were false positives. This was in part because the 12dicts word lists do not contain proper nouns. Additionally, the validity of some words is debatable. For example, is “non-microsoft” a valid word? Perhaps the more correct form would be “not microsoft.” Here, you see the complication of defining what is a valid word without context.

To bring the false positive rate down, two additional sources were used to create the final dictionary: SCOWL (Spell Checker Oriented Word Lists) and Wiktionary. The final dictionary consisted of just short of 500,000 words. The application used to generate the final dictionary can be found in the appendices.
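The merging step can be sketched in a few lines of Python. This is only an illustration, not the linked dictionary builder: the function name `build_dictionary`, the sample word lists, and the decision to lowercase every entry are assumptions.

```python
def build_dictionary(*word_lists):
    """Merge several word lists into one de-duplicated, lowercased set."""
    dictionary = set()
    for word_list in word_lists:
        for line in word_list:
            word = line.strip().lower()
            if word:  # skip blank lines
                dictionary.add(word)
    return dictionary


# Hypothetical stand-ins for lines read from 12dicts, SCOWL, and Wiktionary files.
twelve_dicts = ["apple\n", "banana\n"]
scowl = ["Banana\n", "cherry\n", "\n"]
wiktionary = ["cherry\n", "durian\n"]

merged = build_dictionary(twelve_dicts, scowl, wiktionary)
print(sorted(merged))
```

Using a set makes de-duplication automatic and keeps later membership checks fast, which matters when every word of every sample is looked up.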

### Approach to Random Sampling

The Wikipedia corpus was too large to process within a reasonable amount of time on the hardware available for this research. The entire Wikipedia database download consists of more than 5 terabytes of text (“Wikipedia:Database_download”). To overcome this problem, a random sampling approach was taken.

First, the Wikipedia API is used to request random article titles from the dataset. Next, a query is sent to Wikipedia requesting a listing of all revision metadata for each selected article. Then, the list of revisions for each article is sorted and grouped by year. A random revision within each year subgroup is then selected to serve as the sample. In this way, there is one random sample per year for an article over its lifetime.

To obtain a somewhat representative view of the Wikipedia dataset, 2,400 random articles were used. The number of revision samples obtained varies for each article for two reasons: (1) the article may not have existed yet, or (2) there were no revisions to the article during the year.
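The per-year selection can be sketched as follows. The sketch assumes the revision metadata has already been retrieved and that each entry carries an ISO 8601 timestamp; the function name and the sample records are hypothetical and are not copied from the original sampler.

```python
import random
from collections import defaultdict


def sample_one_revision_per_year(revisions, rng=random):
    """Group revisions by year and pick one at random from each year."""
    by_year = defaultdict(list)
    for rev in revisions:
        year = rev["timestamp"][:4]  # ISO 8601 timestamps start with the year
        by_year[year].append(rev)
    return {year: rng.choice(revs) for year, revs in sorted(by_year.items())}


# Hypothetical revision metadata, shaped loosely like an API response.
revisions = [
    {"revid": 1, "timestamp": "2009-03-01T12:00:00Z"},
    {"revid": 2, "timestamp": "2009-11-15T08:30:00Z"},
    {"revid": 3, "timestamp": "2011-06-20T17:45:00Z"},
]
samples = sample_one_revision_per_year(revisions, random.Random(0))
print(sorted(samples))  # years that have at least one revision
```

A year with no revisions, such as 2010 in this example, simply produces no sample, which matches the second reason given above for the varying number of samples per article.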

### Deconstructing a Wikipedia Page for Processing

The typical Wikipedia page contains a lot of junk. Before an article can be fed to the spellchecker, it must be cleaned. After a sample is retrieved, it is passed to the content processor. The processor first cleans the data and then extracts a list of words to be spellchecked. All markup such as HTML must be removed. Additionally, some content is not relevant or is prone to unique syntax which could erroneously skew the test. As such, three content areas are explicitly excluded from being processed: references, gallery tags, and external links.

The colored transparencies in figure 2 demonstrate what content is included and what is excluded. Areas in red are thrown away. Areas in green are extracted and fed into the word separator which extracts each word that will eventually be spellchecked.
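To make the exclusion step more concrete, here is a simplified Python sketch that strips references, gallery tags, bracketed external links, and any remaining HTML tags using regular expressions. This is a swapped-in simplification: the original application relied on a modified WikiCloth library, and the patterns below are assumptions that will not handle every markup variant.

```python
import re

# Patterns for content that should never reach the spellchecker (assumed forms).
EXCLUDED_BLOCKS = [
    re.compile(r"<ref\b[^>/]*/>", re.IGNORECASE),                    # self-closing refs
    re.compile(r"<ref\b[^>]*>.*?</ref>", re.IGNORECASE | re.DOTALL),  # inline references
    re.compile(r"<gallery\b[^>]*>.*?</gallery>", re.IGNORECASE | re.DOTALL),
    re.compile(r"\[https?://[^\]]*\]"),                              # bracketed external links
]


def clean_wikitext(text):
    """Remove excluded blocks, then drop any remaining HTML tags."""
    for pattern in EXCLUDED_BLOCKS:
        text = pattern.sub(" ", text)
    return re.sub(r"<[^>]+>", " ", text)


raw = (
    "Paris is the <b>capital</b> of France.<ref>Some citation</ref> "
    "<gallery>File:Paris.jpg|Veiw</gallery> [http://example.com Site]"
)
words = re.findall(r"[A-Za-z']+", clean_wikitext(raw))
print(words)
```

The typo inside the gallery caption never reaches the word list, which is the intended effect of excluding those areas.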

Further post-processing is performed on the word list before it is finally spellchecked. This process includes throwing away words that cannot be realistically spellchecked using a static dictionary. For example, currencies cannot be realistically compared to a dictionary of possible valid values, so they are thrown out. Words that consist of just numbers, such as 1888, are tossed along with numbers that have the trailing letter s [e.g. 1940s].
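A minimal Python sketch of this filter is shown below. The regular expressions, in particular the set of currency symbols, are assumptions chosen for illustration; the paper does not specify how the original application recognized currencies.

```python
import re

NUMBER_OR_DECADE = re.compile(r"\d+s?")       # e.g. 1888 or 1940s
CURRENCY = re.compile(r"[$€£¥]\d[\d,.]*")     # e.g. $5 or £1,200.50 (assumed forms)


def is_checkable(word):
    """Return False for tokens a static dictionary cannot sensibly judge."""
    return not (NUMBER_OR_DECADE.fullmatch(word) or CURRENCY.fullmatch(word))


tokens = ["founded", "1888", "1940s", "$5", "£1,200.50", "railway"]
checkable = [t for t in tokens if is_checkable(t)]
print(checkable)
```

Only the tokens that survive this filter would be looked up in the valid word dictionary.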

## Results {#results}

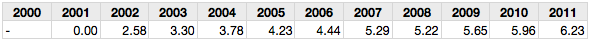

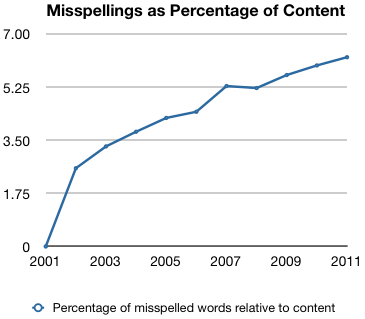

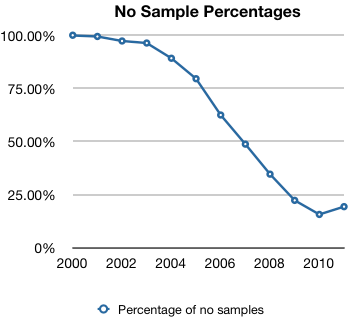

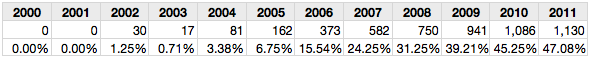

The percentage of words relative to content that were categorized as misspelled increased year over year consistently. Table 1 and graph 1 show this increasing growth.

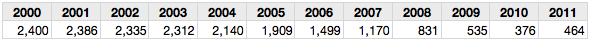

A surprising discovery was that the majority of pages did not have any available samples until the year 2007. This data is shown in table 2 and graph 2. Wikipedia was launched in 2001, so the graph could perhaps indirectly demonstrate the inverse growth rate of content on the Web site. The increase in pages without samples in 2011 can be tentatively attributed to the fact that the test was conducted in September, with three full months remaining in the year.

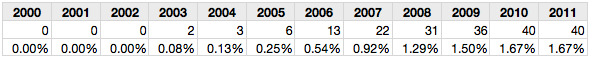

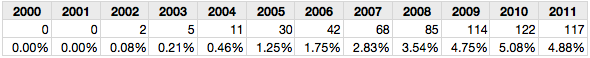

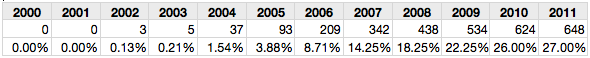

An analysis of potential outliers was conducted to determine potential accuracy rates. Tables 3 through 7 show the number of samples that are above misspelling thresholds of 50%, 25%, 10%, 5%, and 1% respectively. For example, table 3 shows that in the years 2010 and 2011, 40 samples [or 1.67% of the samples] had more than 50% misspellings. The tests show that there are no particular years that are outliers at certain misspelling rate thresholds. The percentage of misspellings increases consistently at every threshold from year to year.
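The threshold analysis amounts to counting, for each cutoff, how many samples exceed it. The Python sketch below shows the idea with made-up rates; the function name and the data are illustrative assumptions and do not reproduce the figures in tables 3 through 7.

```python
def count_above_thresholds(rates, thresholds=(0.50, 0.25, 0.10, 0.05, 0.01)):
    """Count samples whose misspelling rate exceeds each threshold."""
    return {t: sum(1 for r in rates if r > t) for t in thresholds}


# Made-up per-sample misspelling rates, purely for illustration.
rates = [0.60, 0.30, 0.12, 0.04, 0.02, 0.00]
counts = count_above_thresholds(rates)
for threshold, count in counts.items():
    print(f"> {threshold:.0%}: {count} samples")
```

Running the same count separately for each year's samples would give one row per year, which is how a table of this kind could be assembled.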

## Findings {#findings}

On the surface, the results of the test seem to indicate that the hypothesis is false. The percentage of misspellings relative to content has consistently increased year over year. However, it is unlikely that this rate of increase will continue. I would expect to see the misspelling rate begin to level off at some point, but there is not enough time lapse data to notice any such trend as of September 2011. The results of this test must be taken with a grain of salt. Understanding the test's weaknesses will facilitate proper interpretation of the results.

### Test Weaknesses

The two major weaknesses of the test revolve around two attributes: the use of a limited static valid word dictionary and the fact that the text analyzer itself is very dumb.

Let us first discuss the use of a static valid word dictionary. The Global Language Monitor estimates the number of English words to be around 1 million as of the year 2000 (“Number of Words . . .”). That is more than two times the number of words contained in the valid word dictionary. There are also valid words which may not be a part of the English language. For example, an article about a foreign subject may contain words from a different language. On top of this, there are a multitude of syntactically correct forms in which a word may appear. For example, a word may be combined with another word through hyphenation, or it may be in possessive form.

Generation of the valid word dictionary may be more effective by building it from scratch rather than by joining preexisting dictionaries. For example, a larger and modern corpus of English text could be mined for word frequencies. These word frequencies would then determine their inclusion into the dictionary. There are various weaknesses even in this approach, but it may get us one step closer to more accurate results.
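One way to picture the frequency-based approach is the Python sketch below, which keeps only words that appear at least a minimum number of times across a corpus. The tokenizing pattern, the `min_count` cutoff, and the two-sentence corpus are assumptions for illustration; a real corpus and cutoff would need careful selection.

```python
import re
from collections import Counter


def dictionary_from_corpus(texts, min_count=2):
    """Keep words that occur at least min_count times across the corpus."""
    counts = Counter()
    for text in texts:
        counts.update(re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower()))
    return {word for word, n in counts.items() if n >= min_count}


# Tiny hypothetical corpus containing one deliberate typo ("teh").
corpus = [
    "The cat sat on the mat.",
    "The cat's mat was teh best mat.",
]
print(sorted(dictionary_from_corpus(corpus)))
```

The rare typo is excluded because it falls below the cutoff, but so are legitimate rare words, which is one of the weaknesses of this approach.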

The second major weakness is that the text analyzer itself is very dumb. It does not deconstruct a sentence into basic elements. This leads to false positives. A common example of this error can be found with proper nouns which are often excluded from dictionaries. It is possible that a proper noun could be misspelled, but it is more likely to simply not be officially recognized as an English word. The use of frequency metrics when generating the dictionary on a more diverse corpus could be a good area for further research.

The test used in this experiment ignores context, and yet grammar could be a significant determinant of spelling correctness. More advanced natural language processing could be employed to make smarter decisions, for example by taking a word’s grammatical context into account.

### Conclusions

The test does not satisfactorily or definitively confirm or refute the hypothesis. A new test should be developed to test the hypothesis again. Ideally, the new test should look at the entire Wikipedia corpus, and not a limited sample. Some articles have hundreds or thousands of revisions within the span of a year, and there is a large degree of variability in the content quality that is not captured in a sampled test.

In sum, the data seems to indicate that the hypothesis is false. The percentage of misspellings relative to content increases over time. It is possible that this conclusion is correct, but the probability of this being true is rather low. It is more probable that the steadily increasing misspelling rate is the result of the increasingly complex language used as the Wikipedia corpus grows and matures. The simple-minded spellchecker may be unable to cope with this lexical environment. There could also be other outside and random factors contributing to the observed result. It is my scientific opinion that another test should be devised that takes a more holistic approach to natural language processing.

### A View of the Future

Research that builds on the concept of the spellchecker could lead to the development of more advanced spelling and grammar checking bots. These bots would be continually scraping the Wikipedia corpus to bring the high probability errors to the attention of editors in a central interface. This automated detection could lead to a drastic reduction in errors that may otherwise remain hidden. In this case, an error is considered hidden when a visitor might notice a mistake, but takes no action to correct the error.

If this functionality were combined with the ability to learn through some technique such as a neural network or a decision tree, then the entire Wikipedia corpus could become, to borrow a programming term, unit tested. Ultimately, the goal of the system would be to spur a consistent decline in error rates through time by learning what is considered correct by the masses, and what might be incorrect in new content.

The majority of the work has yet to be done to solve this problem. The stage is certainly set for impressive new developments that change the way we manage an extensive set of unstructured data such as found in Wikipedia.

## Appendices and References {#appendices_and_references}

[Download the PDF version of this paper with references](https://jonsview.com/wp-content/uploads/2011/12/Text-Mining-Wikipedia-for-Misspelled-Words.pdf)

[Download Wikipedia Sampler Source Code](https://jonsview.com/wp-content/uploads/2011/12/wikipedia_misspelling_sampler.rb_.txt)

[Download Dictionary Builder Source Code](https://jonsview.com/wp-content/uploads/2011/12/create_dictionary.rb_.txt)

[Download WikiCloth Library Modifications](https://jonsview.com/wp-content/uploads/2011/12/WikiCloth-Library-Modifications.txt)

## Comments

My spouse and I stumbled over here from a different web page and thought I might check things out. I like what I see so now I am following you. Look forward to finding out about your web page again.

Great job Jon.

There’s a detailed review of your method and results by an administrator of the Wikipedia at: http://en.wikipedia.org/wiki/User:WereSpielChequers/typo_study

Recent increases in Internet penetration throughout various parts of the world have yielded an influx of individuals who speak English as a second language or otherwise speak regional dialects. Considering the sheer number of these folks who are emerging onto the international open source stage, I’m sure that this is also a significant causal factor in both true and false positives alike.

In the case of Wikipedia specifically, the amount of new content describing non-English subjects has steadily grown as a relative percentage over the years. Contributions are increasingly likely to contain references to transliterated names (e.g., Shanti Swarup Bhatnagar Prize for Science and Technology or Razdolnaya River) and undocumented acronyms (e.g., IIT when referring to any Indian Institutes of Technology campus or ITCP when referring to Information Technology Certified Professional).

Technical subjects are another prime example of a related paradigm shift on Wikipedia that even further undermines the effectiveness of contemporary dictionaries. As the project matures, the relative demand for new content describing ordinary subjects decreases, but the relative demand for new content describing novel subjects increases. Scientific articles commonly feature descriptors (e.g., hentriacontylic acid, which may also be referenced as hentriacontanoic acid, henatriacontylic acid, or henatriacontanoic acid) that are still foreign to virtually all existing lexicons.

Eventually, Wiktionary (and other dictionaries) will catch up and provide us with an index of these effectively novel terms. But for now, there are probably still some ways to minimize the effects of this complication. For example, if an index were created by aggregating all of Wikipedia’s article names, the list could be divided up into individual words and then deduped to create a dictionary file that would expand tolerance for many proper names. Many transliterations could also be bypassed with an adaptive filter that learns terms enclosed by ( ) brackets in the first sentence. Further, disregarding all words with, say, ≤3 or ≤4 characters would avoid flagging the vast majority of acronyms.

Covered at: http://meta.wikimedia.org/wiki/Research:Newsletter/2011-12-26

Guys,

He’s not running Watson. Good effort though. Even with a simple approach, you can see that the initial data shows that there appears to be more spelling errors per content.

The main weakness I see is your spelling check

=> Could you confirm your results by using a blacklist of commonly misspelled words instead of a whitelist?

This is a much quicker experiment, and you can probably find a blacklist and/or improve it with the most commonly misspelled words you must have collected (and please tell us about that list: I’d look at it statistically and at its evolution over time).

How about FACT checking over time?

Wrong Method.

If you want to find typos or spelling mistakes, go through the list of most common “extradictorial” items, manually classify those as typo, mistake, foreign language, pronunciation, proper noun, technical term, etc; then check wiki corpus against common typos and mistakes only.

Second, compute the error rate per word or per character as opposed to per article, as articles grow over time, allowing a higher chance of a naturally occurring typo or mistake.

What would be really cool though is to track true errors to the edits that introduced them, figure out whether the word in question was added in this edit or changed, then cross-correlate with IP location, user main language, time of day in the given timezone, workday vs holiday, method of entry if available (computer vs phone etc.); then you might get somewhere with this.

Good luck!

uh … “lead me to ask ” — that would be “led” if you were writing in the past tense … so, were you?

You misspelled “led” (past tense of lead) in the second sentence.

word!

Thanks for the heads up. It is fixed. I was joking with a friend that I apparently failed to run my own spellchecker on my paper!

Apart from more sophisticated detection (like one that accurately detects whether Proper English or that ex-colonial minority dialect is in use for a given article), what about feeding in the “simple english” wikipedia version?

Might also want to try and group articles, see if there isn’t an influence from, say, txtspking youf with possibly associated diminished spelling ability, which one might surmise should show up in the topics with the most spelling errors per amount-of-text unit.

Is the misspelling not a U.S. problem? for example the U.S. has no official language there was a campaign to make English the official language of the United States but it turned out the fastest-growing language in the United States was Spanish. Most of us when we read some of the semi-illiterate nonsense from the U.S. we skip over words and try to put the missing pieces together to make sense of the semi-illiterate nonsense. The more they praise the semi-illiterate the worse it will get. Gotten instead of got! us Instead of U.S. all of us. Capital letters US instead of U.S. ALL OF US. and referring to the U.S. as America the continent instead of the United States ( OF ) America.

Languages change. If your baseline is constant the percentage of spelling errors increases with time. Try using Shakespeare as a baseline, or Byron, and you’ll see higher numbers.

This certainly could become a possible factor over a long time span. I do not think that it is necessarily the cause of the results observed in this test because of other factors though. I included the Wikipedia sister project Wiktionary when I built the English word list, so the database is current, at least so far as contributors to Wiktionary believe.

It occurs to me that another way to view the curve is that jargon takes a while to become a recognized word. You may be seeing a lot of words that are flagged as “non english” today, but will be recognized as english in the future. One way to control for this would be to rerun the experiment using older versions of your word lists (as they would have appeared N years ago) and see if they show the same curve, but with the inflection points N years further in the past.

Just a thought. Interesting study. Well conceived.

Can you compare misspelling rates in other languages? An alternate hypothesis is that over time the wikipedia gains increasing international popularity and international contributors writing in English who do not write English as a first language and hence the spelling quality decreases. There should not, however, be the same increase in spelling mistakes in other languages if there are relatively fewer non-native contributors for non-English articles. One might expect the number of native French speaking contributors contributing in English to far exceed the number of English speakers contributing to the French version, for example.

That sounds like an interesting avenue of exploration to me. The trick with the current rudimentary spell checker is the formulation of the valid word dictionary. It was troublesome enough to come up with an English one [which is an amalgamation from several sources including Wiktionary]. Latin-based languages would probably be better candidates for comparison than others. I say that because I simply don’t know enough about logographic and other writing systems and how they work.

Support for Unicode characters would be mandatory and a basic understanding of the language would also be needed. For example, I worked on the assumptions that all the characters would be ASCII [an assumption which could be skewing the results], and that punctuation of certain kinds could be stripped in order to spell check root words [e.g. throwing away apostrophe s (‘s)]. Remember that this is a very basic application.

I would be interested in seeing how another Latin-based language compares following similar assumptions. I see that French has the second-highest number of entries in Wiktionary after English, so it might be a viable starting point.

As Cellar mentions, the simple english version might also be a worthwhile exploration, and certainly an easier first step than trying to tackle a foreign language [especially for ungifted individuals such as myself stuck on one language].

I think what we all need to realize is that as Wikipedia becomes more and more controlled by obnoxious and ignorant teenagers and 20-somethings still living in Mom’s basement, the more they are driving out the intelligent content writers who also know how to spell. Thus, you end up with a core of writers with such pathetic skills at encyclopedia writing as this meat-head:

http://en.wikipedia.org/w/index.php?title=Mzoli%27s&oldid=158511192

What were you looking for that led you to Mzoli’s and why link to the old article instead of http://en.wikipedia.org/wiki/Mzoli?

Steve, it sounds like you don’t know much about one of Wikipedia’s most disruptive editors: Wikipedia’s co-founder, Jimmy Wales. He’s the proverbial bull in the china shop.

“Understanding of the tests weaknesses will facilitate proper interpretation of the results.”

The main weakness I see is the weakness of the spelling check. You mentioned that the first attempt had a false positive rate that was “very high”, but there’s no mention of the rate for your full dictionary. At the minimum, you should attempt to at least get a ballpark figure on what the false positive rate is — 5%? 20%? 50%? higher? — by taking a random sampling of at least a hundred spelling ‘errors’ and analysing them by hand.

Unequivocally.

The false positive rate is still high, but I do not remember the average figure. I will see about developing a ballpark figure next week. The false positive rate will be heavily dependent on the sample for reasons which I will now discuss.

The main driver behind this high error rate is the use of more complex language. By complex language I mean several things, not just the expansion of the English vocabulary in use [although that might be a component to some small extent given the static dictionary]. I also mean more significant issues, such as:

For these reasons, especially the second bullet above, I feel that a more sophisticated text mining implementation will need to be created. Such an implementation would need to be capable of deconstructing sentences into parts, understanding the relations of grammar and context, and then making a determination on the validity of spelling: be it a non-English word, or intentionally misspelled in the case of a proper noun, and so forth. Such an implementation moves into the realm of natural language processing, which was outside the scope of the class that this paper was written for.

While the test failed to provide any conclusive answers, there are still some interesting by-products which can be noted and which may spawn additional hypotheses and tests for others who are also interested in this kind of thing:

Perhaps the largest takeaway from this paper should be a vision for an interesting future where the content of Wikipedia is continuously integrated. It is not enough to simply add grammatical analysis, stemming, etc. to the solution. For a meaningful and lasting outcome, a final solution would need to also incorporate elements of machine learning so that the system learns the commonly accepted language. If we follow this line of thinking, what becomes apparent and truly fascinating is the idea that we could obtain a repository of knowledge that becomes future-proof to the inevitable changes in language. As languages evolve over time, old content could be brought up to date at the same time [or rolled back and analyzed for those interested in etymology]. I’ve gotten a bit sidetracked here, but you can see where such a project would lead.

Mark my words, within seven years, Wikipedia will be nothing more than 15 million articles of animal-like squeaking, grunting, and neighing.